Publications

A collection of my research work.

L4: Low-Latency and Load-Balanced LLM Serving via Length-Aware Scheduling

Yitao Yuan, Chenqi Zhao, Bohan Zhao, Zane Cao, Yongchao He, Wenfei Wu

2025

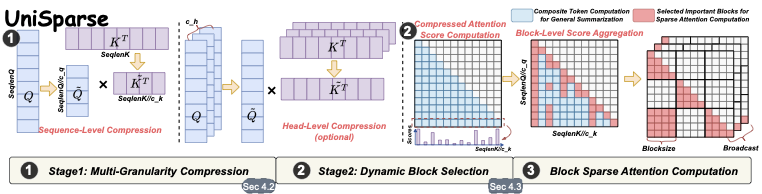

A Unified Sparse Attention via Multi-Granularity Compression

Siran Liu, Zane Cao, Yongchao He

2025

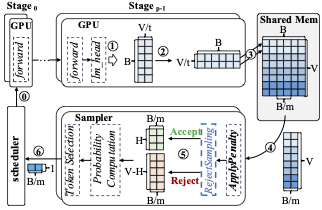

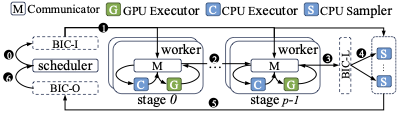

SIMPLE: Disaggregating Sampling from GPU Inference into a Decision Plane for Faster Distributed LLM Serving

Bohan Zhao, Zane Cao, Yongchao He

2025

MegatronApp: Efficient and Comprehensive Management on Distributed LLM Training

Bohan Zhao, Guang Yang, Shuo Chen, Ruitao Liu, Tingrui Zhang, Yongchao He, Wei Xu

2025

SiPipe: Bridging the CPU-GPU Utilization Gap for Efficient Pipeline-Parallel LLM Inference

Yongchao He, Bohan Zhao, Zheng Cao

2025

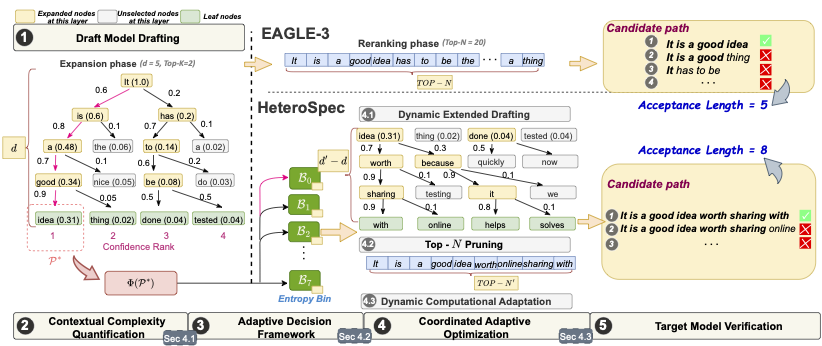

HeteroSpec: Leveraging Contextual Heterogeneity for Efficient Speculative Decoding

Siran Liu, Yang Ye, Qianchao Zhu, Zane Cao, Yongchao He

2025